Our Lives, Our Stories: Legacy of the Randolph Site Part 2: Animating the past

In the first part of this two-part blog, we looked at why it was important to film at the Peyton Randolph House: to highlight the voices and lives of the enslaved individuals who lived there. The lives and stories of these 27 people have never been fully told in the place where they lived and worked together. In this second blog, we will look at the technology we used to help capture these lives for the tour.

Before we even begin to film the actors, our colleagues from the department of Architectural Preservation and Research arrive early in the morning to stage the rooms. Using historic documents and primary sources, they identify how the rooms were used and what they would have looked like when furnished. This important work forms the backdrop to the experience of the 360-degree tour.

360-degree tours are developed in software called 3D Vista. This software brings photographs together and connects them in a seamless experience that flows as if you are actually moving through the building. The tour is created from multiple 360-degree photos linked together with ‘hotspots’, the white circles that you will see as you move around the tour. The 360-degree images are taken using a tripod-mounted Insta360 One X2 camera. This is a small camera, similar in size to your smartphone. When we take the photos, all members of the team have to be out of the frame, removed from the view of the lenses. Lenses, plural, you ask? Yes, each camera has two ‘fish-eye’ lenses, one on either side of the main body. These work like a fish’s eyes too, allowing everything in the camera’s line of sight to be seen and recorded.

Because the camera takes a picture in 360 degrees, there is no “back of the camera” to hide behind. So, when we take photos, the crew have to move to the next room. But being out of the room means we have to run the camera without touching it. We solve this by connecting the camera to a standard smartphone; everything can then be managed in real time from a remote location. This kind of 360-degree image forms the basis of all the virtual tours you have seen previously on our website.

When you look at the photos from the 360-degree camera, the result seems oddly distorted (above). The images are called equirectangular, and are basically spherical images unfolded onto a 2D rectangular plane. The image looks warped when viewed flat, but when seen through the 360-degree software it is wrapped back into a sphere. This means you can look all around the room as if you were standing where the camera was.

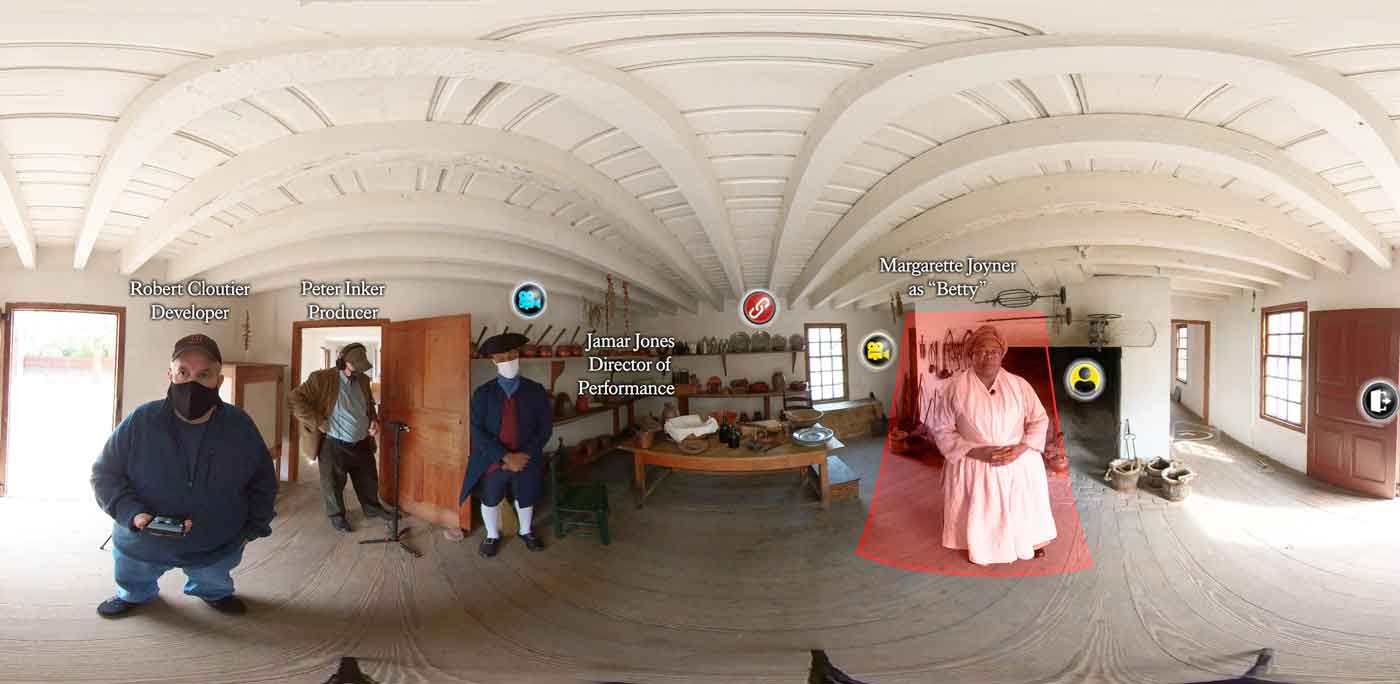
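For readers curious about how the unfolding works, here is a minimal sketch in Python of the usual equirectangular mapping. It is an illustration only, not the code that 3D Vista or the camera uses. The function names and the 4000 x 2000 image size are assumptions made for the example. The idea is that the horizontal viewing angle is spread evenly across the width of the image and the vertical angle evenly down its height, which is why objects near the ceiling and floor look stretched sideways.

```python
import math


def direction_to_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to (x, y) in an equirectangular image.

    yaw_deg:   horizontal angle, -180..180, where 0 is the image centre
    pitch_deg: vertical angle, 90 is straight up and -90 is straight down
    """
    u = (yaw_deg + 180.0) / 360.0    # 0..1, left edge to right edge
    v = (90.0 - pitch_deg) / 180.0   # 0..1, top edge to bottom edge
    # Clamp so that the extreme angles still land on a valid pixel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return x, y


def horizontal_stretch(pitch_deg):
    """How much wider a detail is drawn at this pitch than at the horizon.

    Every row of the image spans the full 360 degrees, but the circle it
    represents on the sphere shrinks by cos(pitch), so rows near the top
    and bottom are stretched by 1 / cos(pitch).
    """
    return 1.0 / math.cos(math.radians(pitch_deg))


# Hypothetical 4000 x 2000 image (equirectangular images are twice as wide as tall).
centre = direction_to_pixel(0, 0, 4000, 2000)
```

Looking straight ahead lands in the middle of the picture, and `horizontal_stretch(60)` comes out at roughly 2, meaning a detail 60 degrees above the horizon is drawn about twice as wide as it would be at eye level.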

For the live-action 360-degree filming we used the same camera, this time running it in video mode. The camera is set up and first takes a still image of the empty room. Next, the actors are brought in and the film shoot is set up with the camera in exactly the same place. Although the camera records in all directions, the crew stay in the room during the shoot. The filming captures everyone in the room, but this is not a problem because during post-production we use only the area of the film around the actor and add it to the 360-degree still image that forms the tour. You can see this in the image below, colored red for clarity. Along with the filming we also set up audio recording equipment, specifically a Comica Boom X-D D1 Lavalier microphone connected to a Zoom H6 six-channel digital audio recorder.
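The compositing step described above can be sketched in a few lines of Python. This is a simplified illustration, not our actual post-production workflow, which is done in editing software. It assumes the area around the actor is a simple rectangle, and it represents images as plain lists of rows; the function name `composite_region` and the tiny example image are made up for the sketch. Because the camera does not move between the still and the video, the two line up pixel for pixel, so copying only the actor's region hides the crew standing elsewhere in the room.

```python
def composite_region(still, frame, box):
    """Return a copy of `still` with the pixels inside `box` taken from `frame`.

    still, frame: images of the same size, each a list of rows of pixels
    box: (left, top, right, bottom) in pixels; right and bottom are exclusive
    """
    same_height = len(still) == len(frame)
    same_widths = all(len(s) == len(f) for s, f in zip(still, frame))
    if not (same_height and same_widths):
        raise ValueError("still and frame must have the same dimensions")

    left, top, right, bottom = box
    result = [row[:] for row in still]  # copy so the original still is untouched
    for y in range(top, bottom):
        # Keep the empty-room pixels everywhere except the actor's region.
        result[y][left:right] = frame[y][left:right]
    return result


# Tiny stand-in images: "S" marks still pixels, "F" marks pixels from the film.
still_image = [["S"] * 6 for _ in range(4)]
video_frame = [["F"] * 6 for _ in range(4)]
actor_box = (2, 1, 4, 3)  # hypothetical region around the actor

combined = composite_region(still_image, video_frame, actor_box)
```

For a video, the same function would be applied to every frame in turn, for example `[composite_region(still_image, f, actor_box) for f in frames]`, where `frames` is a list of video frames.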

Alongside the 360-degree filming, we also took separate standard 2D videos using a Sony a6100 camera with 4K video quality. These videos are not embedded into the 360-degree tour, but open in a new window when you click on one of the camera icons found in the tour. The reason for this is simple: the 360-degree camera captures the 3D quality of the performance, but subtitles cannot be added to that video. To ensure maximum accessibility for our audience, we took the standard 2D video and played it back in a tour window through our YouTube account, which allows subtitles to be added. This means people who are deaf or hard of hearing can easily understand what the actors are saying.

I hope you enjoy this new 360-degree tour. For everyone involved this was an exciting project. Being able to capture a glimpse of the people who lived at the Randolph site was fulfilling for all of us. For probably the first time, all 27 enslaved people at the Randolph site have had their stories told simultaneously. It’s also a great opportunity for you to experience just a small sampling of the work our highly skilled actors and interpreters do for the Colonial Williamsburg Foundation.

Rob Cloutier is the Colonial Williamsburg 3D visualization fellow for 2019. He is the owner of Digital History Studios, and has been a 3D cinematic animator for the past 25+ years, 12 of which have been in the computer gaming field. His website is https://www.3dhistory.com/.

Dr. Peter Inker is the Director of the Historical Research and Digital History Department. He is passionate about the past and living history museums. Originally from Wales, UK, he now lives permanently in Virginia.

This project was funded in part by a generous grant from the National Endowment for the Humanities.
